Artificial intelligence has revolutionized the way we create, interpret, and interact with visual content. Among the most groundbreaking advancements in this space is OpenAI’s DALL·E, an AI model capable of generating highly detailed and creative images from textual descriptions. Whether you’re an artist, designer, or simply curious about the technology, this guide will walk you through the fascinating inner workings of DALL·E, step by step.

What Is DALL·E? A Summary

DALL·E is a text-to-image generation model that takes natural language descriptions as input and generates high-quality images as output. Named as a playful blend of Salvador Dalí (the surrealist artist) and Pixar’s WALL·E, the model can produce highly creative and imaginative visuals based on textual cues. For example, you could ask DALL·E to create “a futuristic cityscape with flying cars” or “a panda painting a masterpiece” and receive stunning visuals in return.

How It Works in a Nutshell

- Text Input: The user provides a textual description of the desired image.

- Text Encoding: The model converts this description into numerical representations using a text encoder.

- Image Generation: Using a diffusion process, the model starts with random noise and iteratively refines it into a coherent image based on the encoded text.

- Output: The final image is presented, reflecting the user’s input description.

Foundational Concepts of DALL·E

Before diving into the step-by-step process, let’s understand the key components and concepts that make DALL·E possible:

- Transformer Models: These are the backbone of modern AI, excelling at understanding and generating sequences, whether text or other data types.

- Diffusion Models: A type of generative model that creates images by starting with noise and refining it step by step.

- Text Embeddings: Numerical representations of text that capture its meaning, enabling the model to relate language to visual concepts.

Step-by-Step Breakdown of DALL·E’s Image Generation Process

Let’s break down the entire process into detailed steps to understand how DALL·E transforms your words into stunning visuals.

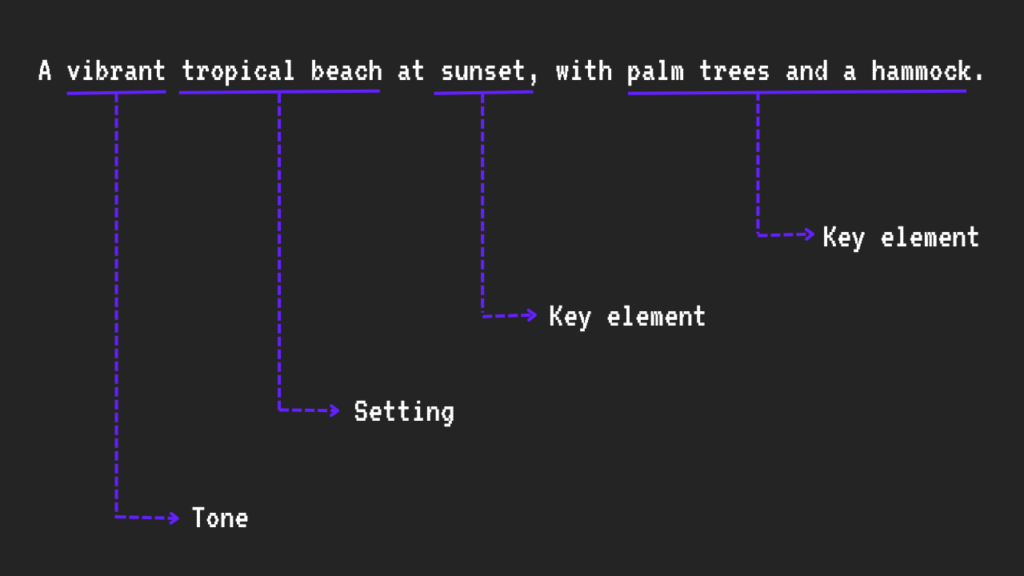

1. Text Input

The process begins with a textual description provided by the user. For instance:

"A vibrant tropical beach at sunset, with palm trees and a hammock."

DALL·E uses this information to craft an image that aligns with the description.

2. Text Encoding

To process the textual input, DALL·E employs a text encoder based on Transformer models. The encoder converts the input text into a numerical representation called an embedding.

Key Insight: The embedding captures the semantic meaning of the text, enabling the model to understand what “a tropical beach” should look like.

3. Generating an Initial Image

Here’s where the magic begins. DALL·E’s image generation process starts with random noise. Imagine a blank canvas filled with static-like specks. This noise serves as the starting point for creating the image.

4. The Diffusion Process

DALL·E relies on a diffusion model to gradually transform the random noise into a meaningful image. This process involves the following steps:

- Starting with Noise: The model begins with a completely random noise image.

- Iterative Refinement: Over multiple steps, the model removes noise, guided by an optimization process that minimizes the difference between the current image and the ideal image implied by the text embedding. At each step, the model assesses the consistency between the image details and the text embedding, iteratively refining the image to better align with the textual description.

How It Knows When to Stop? The model is designed to stop the refinement process when changes between consecutive steps fall below a certain threshold, indicating that further refinement would not significantly improve the image. This threshold is determined during training and ensures the process concludes efficiently without overfitting or unnecessary detail addition.

- Conditioning on Text: The refinement process is continuously guided by the text embedding at every step, ensuring that each modification to the image aligns with the semantics and details specified in the original description.

5. Training the Model

DALL·E’s ability to generate realistic images comes from extensive training on a dataset containing millions of image-text pairs. During training:

- The model learns to associate textual descriptions with corresponding visual elements.

- It minimizes errors in predicting how an image should look based on text.

6. Outputting the Final Image

After completing the diffusion process, the model outputs a high-resolution image. The result is a visually coherent and detailed depiction of the user’s text input.

For example, given the prompt:

The output might feature:

- Skyscrapers with futuristic architecture.

- Flying cars zooming through the air.

- A vibrant color palette dominated by neon tones.

Advantages of DALL·E

DALL·E’s capabilities extend beyond simple image generation. Here are some notable benefits:

- Creativity: The model can produce imaginative and surreal visuals.

- Customizability: Users can specify detailed prompts to tailor the output.

- Efficiency: Complex visuals can be generated in seconds.

Challenges and Ethical Considerations

While DALL·E is a powerful tool, it raises several challenges:

- Bias: The model may reflect biases present in its training data.

- Misuse: There’s potential for generating harmful or misleading content.

- Copyright Concerns: Training on datasets containing copyrighted images poses legal and ethical questions.

Conclusion

DALL·E represents a significant leap forward in AI-driven creativity, blending advanced machine learning techniques like Transformers and Diffusion Models to turn words into visuals. By understanding its inner workings, we can better appreciate the technology’s potential and responsibly harness its power.

Whether you’re looking to create art, visualize ideas, or explore new creative possibilities, DALL·E stands as a testament to the incredible potential of AI in reshaping our interaction with the visual world.